By ATS Staff - June 9th, 2020

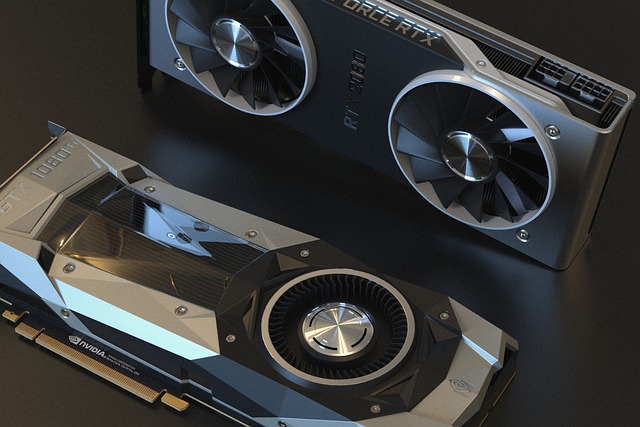

As machine learning (ML) becomes increasingly prominent across industries, having the right hardware is crucial for training models efficiently. One of the most important components in this setup is the Graphics Processing Unit (GPU). GPUs excel at parallel processing, which makes them ideal for machine learning tasks like deep learning, data processing, and model training. Choosing the right GPU can significantly impact the speed and effectiveness of your projects. Here are the key factors to consider when purchasing a GPU for machine learning.

1. Compute Power (CUDA Cores and Tensor Cores)

The number of CUDA cores (the general-purpose parallel processors in NVIDIA GPUs, named after NVIDIA’s Compute Unified Device Architecture platform) strongly influences how much parallel computation a GPU can perform, which is critical for training deep learning models. Core counts are only meaningfully comparable within the same GPU generation, however, since per-core performance changes between architectures. Alongside CUDA cores, Tensor Cores (found in NVIDIA’s RTX consumer cards and data-center GPUs such as the V100 and A100) are specialized units designed to accelerate the matrix multiplications at the heart of neural networks.
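
For a rough sense of what core counts mean, a GPU's theoretical peak FP32 throughput is commonly estimated as CUDA cores × 2 × boost clock, since one fused multiply-add (FMA) counts as two floating-point operations. The sketch below assumes that formula and uses the RTX 3090's published specs (10,496 CUDA cores, about 1.70 GHz boost). It gives a ceiling, not a prediction: real training throughput usually falls well short of peak, and Tensor Core throughput is not included.

```python
def peak_fp32_tflops(cuda_cores: int, boost_clock_ghz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS.

    Assumes each CUDA core completes one fused multiply-add (FMA) per clock
    and counts each FMA as two floating-point operations.
    """
    flops_per_clock = cuda_cores * 2  # 2 FLOPs per FMA per core
    gflops = flops_per_clock * boost_clock_ghz  # cores * 2 * GHz = GFLOPS
    return gflops / 1000.0  # GFLOPS -> TFLOPS


if __name__ == "__main__":
    # RTX 3090 published specs: 10,496 CUDA cores, ~1.695 GHz boost clock.
    print(f"{peak_fp32_tflops(10496, 1.695):.1f} TFLOPS")  # 35.6 TFLOPS
```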

GPUs with Tensor Cores, such as the NVIDIA RTX and A100 series, can substantially speed up deep learning training and inference, especially when used with reduced-precision arithmetic. If your machine learning workload is dominated by deep learning models, choosing a GPU with Tensor Cores is a wise investment.

2. Memory Size (VRAM)

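For most machine learning tasks, the amount of video RAM (VRAM) on your GPU is another critical factor. During training, VRAM must hold the model's weights, its gradients, the optimizer's state, and the intermediate activations for the current batch, so large models and high-resolution inputs can consume vast amounts of memory. The dataset itself does not need to fit, since data is normally streamed to the GPU in batches. But if the model and a batch do not fit, training fails with an out-of-memory error, and you must shrink the batch size or the model, or fall back on slower memory-saving techniques.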

Generally, you should aim for a GPU with at least 8GB of VRAM for smaller models and datasets. For larger models, or for image-heavy work such as computer vision, you may need 12GB to 24GB or more. The NVIDIA RTX 3090 (24GB) and A100 (40GB, with an 80GB variant), for example, offer the larger memory capacities suited to heavy workloads.
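
To estimate whether a model will fit, a common rule of thumb for FP32 training with the Adam optimizer is about 16 bytes per parameter: 4 for the weights, 4 for the gradients, and 8 for Adam's two moment estimates. Activation memory comes on top and depends on batch size and architecture, so the sketch below takes it as a caller-supplied assumption. The 350M-parameter model and 4 GB activation figure are hypothetical.

```python
# Bytes per parameter for FP32 training with Adam (rule of thumb):
# weights (4) + gradients (4) + Adam first and second moments (4 + 4).
BYTES_PER_PARAM_FP32_ADAM = 16


def training_vram_gb(num_params: float, activation_gb: float = 0.0) -> float:
    """Rough VRAM needed for training, in decimal GB (1 GB = 1e9 bytes).

    activation_gb is a caller-supplied estimate; it grows with batch size,
    sequence length or image resolution, and model depth.
    """
    state_gb = num_params * BYTES_PER_PARAM_FP32_ADAM / 1e9
    return state_gb + activation_gb


def fits_in_vram(num_params: float, activation_gb: float, vram_gb: float) -> bool:
    """True if the rough estimate fits within the card's VRAM."""
    return training_vram_gb(num_params, activation_gb) <= vram_gb


if __name__ == "__main__":
    # Hypothetical 350M-parameter model with ~4 GB of activations per batch.
    need = training_vram_gb(350e6, activation_gb=4.0)
    print(f"~{need:.1f} GB needed")  # 5.6 GB of model state + 4 GB activations
    for vram in (8, 12, 24):
        verdict = "fits" if fits_in_vram(350e6, 4.0, vram) else "too small"
        print(f"{vram} GB card: {verdict}")
```

Real usage also includes framework overhead and memory fragmentation, so leaving some headroom beyond this estimate is sensible.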

3. FP16 vs. FP32 Support

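Deep learning involves a trade-off between speed and numerical precision. GPUs traditionally compute in 32-bit floating point (FP32), which is precise but slower. 16-bit floating point (FP16) halves the memory needed per value, and GPUs with Tensor Cores, such as those based on NVIDIA’s Volta, Turing, and Ampere architectures, run FP16 matrix math much faster than FP32. In practice this is usually done through mixed-precision training, which computes in FP16 while keeping an FP32 copy of the weights, so accuracy is rarely affected significantly.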

If your machine learning tasks can tolerate reduced precision, choosing a GPU with robust FP16 support can substantially cut training time and let you fit larger models or batches into the same VRAM. This is particularly helpful when training on large datasets or with complex architectures.
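
Python's standard `struct` module can round numbers to IEEE 754 half precision (its `'e'` format), which makes FP16's trade-offs easy to see without a GPU. The sketch below is illustrative only: it shows the halved storage, the coarser rounding, and how very small gradients underflow to zero, which mixed-precision training counters with loss scaling. The `to_fp16` helper is hypothetical, and the speedup itself comes from GPU hardware, so it is not shown here.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (FP16) value."""
    # struct's 'e' format is IEEE 754 binary16; packing rounds to nearest.
    return struct.unpack("<e", struct.pack("<e", x))[0]


if __name__ == "__main__":
    # Storage cost: FP16 uses half the bytes of FP32.
    print(struct.calcsize("<e"), "bytes vs", struct.calcsize("<f"), "bytes")  # 2 bytes vs 4 bytes

    # Reduced precision: 0.1 is stored only approximately.
    print(to_fp16(0.1))  # 0.0999755859375

    # A small update to a weight of 1.0 is lost (FP16 spacing near 1.0 is ~0.001).
    print(to_fp16(1.0 + 0.0004) == 1.0)  # True

    # Very small gradients underflow to zero in FP16...
    grad = 1e-8
    print(to_fp16(grad))  # 0.0
    # ...unless they are scaled up before the FP16 step and scaled back down
    # in higher precision afterwards: the idea behind "loss scaling".
    scale = 1024.0
    print(to_fp16(grad * scale) / scale)  # non-zero, close to 1e-8
```

This is why mixed-precision training keeps master weights in FP32: updates that would vanish in FP16 are still accumulated correctly.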

4. Compatibility with Popular Machine Learning Frameworks

Before purchasing a GPU, make sure it is supported by your preferred machine learning frameworks, such as TensorFlow, PyTorch, or Keras. NVIDIA GPUs use the CUDA platform and the cuDNN deep learning library, which are optimized for neural networks and supported by virtually all major frameworks. AMD GPUs, while powerful, cannot run CUDA at all; they rely instead on AMD's ROCm (Radeon Open Compute) platform, which PyTorch and TensorFlow support for a narrower range of GPUs and operating systems, with a smaller ecosystem of tools and tutorials.

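Also check driver and software compatibility: each framework release is built against specific CUDA (or ROCm) versions that require a minimum driver version, and mismatches are a common source of setup problems that can slow down your workflow.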
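
If you use PyTorch, a quick check like the one below confirms that the installed build can actually see your GPU. It is a sketch assuming PyTorch: `torch.cuda.is_available()`, `torch.version.cuda`, `torch.version.hip`, and `torch.backends.cudnn.version()` are PyTorch's own attributes, while the function name `describe_gpu_backend` is hypothetical. TensorFlow offers a similar check via `tf.config.list_physical_devices("GPU")`.

```python
import importlib.util


def describe_gpu_backend() -> dict:
    """Report which GPU backend the installed PyTorch build can use.

    Returns {"torch_installed": False} when PyTorch is not installed.
    """
    if importlib.util.find_spec("torch") is None:
        return {"torch_installed": False}

    import torch  # imported lazily so this function works without PyTorch

    info = {
        "torch_installed": True,
        "torch_version": torch.__version__,
        # CUDA version the build was compiled against (None on CPU or ROCm builds).
        "cuda_build": torch.version.cuda,
        # ROCm/HIP version on AMD builds (None on CUDA or CPU builds).
        "hip_build": getattr(torch.version, "hip", None),
        # True if a usable GPU was found; ROCm builds also report via torch.cuda.
        "gpu_available": torch.cuda.is_available(),
    }
    if info["gpu_available"]:
        info["device_name"] = torch.cuda.get_device_name(0)
        if info["cuda_build"] is not None:
            # cuDNN version as an integer, or None if cuDNN is unavailable.
            info["cudnn_version"] = torch.backends.cudnn.version()
    return info


if __name__ == "__main__":
    for key, value in describe_gpu_backend().items():
        print(f"{key}: {value}")
```

A `cuda_build` value with `gpu_available: False` usually points to a missing or too-old driver rather than a hardware problem.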

5. Budget

GPUs can be expensive, and high-end models like the NVIDIA A100, RTX 4090, or TITAN series can cost thousands of dollars. However, not all projects require top-tier GPUs. For small or medium-sized projects, a more affordable option like the NVIDIA RTX 3060 or AMD Radeon RX 6800 XT can provide solid performance without breaking the bank, although the AMD card depends on ROCm support, as noted above.

Understanding your specific needs will help you balance performance and cost. If your workload is light or you are just starting out, mid-range GPUs with 8GB-12GB of VRAM can often suffice.

6. Energy Consumption and Cooling

High-performance GPUs draw significant power and generate a lot of heat. Make sure your power supply unit (PSU) can handle your GPU's wattage requirements: the NVIDIA RTX 3090, for example, is rated at 350 watts, and NVIDIA recommends at least a 750W PSU for a system built around it.
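
A simple way to size a PSU is to add up the main components' rated power draw and apply a safety margin. The sketch below assumes a 30% margin and about 100 W for the rest of the system; both are rules of thumb rather than vendor guidance, so check your GPU maker's recommended PSU rating first. The function name and the list of PSU sizes are hypothetical. With the RTX 3090's 350 W and a hypothetical 105 W CPU, it lands on the 750W figure mentioned above.

```python
import math

# Common retail PSU capacities in watts (illustrative, not exhaustive).
STANDARD_PSU_SIZES_W = (450, 550, 650, 750, 850, 1000, 1200, 1600)


def recommended_psu_watts(gpu_w: float, cpu_w: float, other_w: float = 100.0,
                          headroom: float = 1.3) -> int:
    """Smallest standard PSU size covering estimated peak draw plus headroom.

    headroom=1.3 (a 30% margin) is a rule-of-thumb assumption that leaves
    room for transient power spikes and future upgrades.
    """
    required = (gpu_w + cpu_w + other_w) * headroom
    for size in STANDARD_PSU_SIZES_W:
        if size >= required:
            return size
    # Above the largest listed size: round up to the next 100 W.
    return int(math.ceil(required / 100.0) * 100)


if __name__ == "__main__":
    # RTX 3090 (350 W board power) with a hypothetical 105 W CPU.
    print(recommended_psu_watts(gpu_w=350, cpu_w=105))  # 750
```

For multi-GPU builds, add each card's rating to `gpu_w`.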

Additionally, make sure your system has adequate cooling. GPUs running sustained machine learning workloads heat up quickly, and inadequate cooling leads to thermal throttling, where the GPU lowers its clock speeds to protect itself and performance drops. Prolonged high temperatures can also shorten the card's lifespan.
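
To check whether a GPU is throttling under load, you can poll NVIDIA's `nvidia-smi` tool, which ships with the driver. This is a minimal sketch assuming an NVIDIA GPU and driver: the query field names are `nvidia-smi`'s own (run `nvidia-smi --help-query-gpu` for the full list), while the helper functions are hypothetical. Values are kept as strings because some GPUs report fields such as power draw as `[N/A]`.

```python
import shutil
import subprocess

# nvidia-smi query fields: temperature (C), power draw (W), SM clock (MHz), utilization (%).
FIELDS = ("temperature.gpu", "power.draw", "clocks.sm", "utilization.gpu")


def parse_gpu_stats(csv_text: str) -> list[dict]:
    """Parse `nvidia-smi --format=csv,noheader,nounits` output, one dict per GPU."""
    stats = []
    for line in csv_text.strip().splitlines():
        values = [v.strip() for v in line.split(",")]
        stats.append(dict(zip(FIELDS, values)))
    return stats


def query_gpu_stats() -> list[dict]:
    """Query live GPU stats; returns [] if nvidia-smi is not on PATH."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={','.join(FIELDS)}",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_stats(result.stdout)


if __name__ == "__main__":
    # Run during training: SM clocks falling while temperature stays high suggest throttling.
    for i, gpu in enumerate(query_gpu_stats()):
        print(f"GPU {i}: {gpu['temperature.gpu']} C, {gpu['power.draw']} W, "
              f"{gpu['clocks.sm']} MHz, {gpu['utilization.gpu']}% busy")
```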

7. Multi-GPU Setup and Scalability

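If you anticipate that your projects will scale over time, consider whether your GPUs and motherboard suit a multi-GPU setup. Frameworks can train across multiple GPUs over standard PCIe, and interconnects such as NVIDIA NVLink speed up data exchange between GPUs, which helps when training and serving large machine learning models. (AMD CrossFire, like NVIDIA SLI, is a graphics-rendering technology and is not used for machine learning.)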

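However, not all machine learning tasks benefit from multiple GPUs: small models, or setups with slow links between GPUs, can spend much of each training step synchronizing rather than computing. Research whether your projects would scale effectively with multiple GPUs before investing in this type of configuration.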
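
Why doesn't every workload scale? In data-parallel training, each GPU processes part of the batch and then all GPUs exchange gradients, so communication time eats into the gain. The sketch below uses an idealized ring all-reduce cost model, in which each GPU transfers about 2(N-1)/N times the gradient size. The model size and bandwidth figures are hypothetical, and the model ignores both latency and the compute/communication overlap that frameworks such as PyTorch's DistributedDataParallel perform, so treat it as intuition rather than a prediction.

```python
def ring_allreduce_seconds(num_gpus: int, grad_bytes: float,
                           bandwidth_bytes_per_s: float) -> float:
    """Idealized ring all-reduce time: each GPU sends and receives
    2 * (N - 1) / N times the gradient size. Ignores latency."""
    if num_gpus <= 1:
        return 0.0
    return 2 * (num_gpus - 1) / num_gpus * grad_bytes / bandwidth_bytes_per_s


def data_parallel_speedup(num_gpus: int, step_seconds_1gpu: float,
                          grad_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Speedup of data-parallel training over one GPU at a fixed global batch.

    Assumes compute splits evenly across GPUs and communication does not
    overlap with compute.
    """
    compute = step_seconds_1gpu / num_gpus
    comm = ring_allreduce_seconds(num_gpus, grad_bytes, bandwidth_bytes_per_s)
    return step_seconds_1gpu / (compute + comm)


if __name__ == "__main__":
    grad_bytes = 100e6 * 4  # hypothetical 100M-parameter model, FP32 gradients
    # Hypothetical effective bandwidths for a slower vs. a faster interconnect.
    for label, bw in (("10 GB/s", 10e9), ("50 GB/s", 50e9)):
        s = data_parallel_speedup(4, 1.0, grad_bytes, bw)
        print(f"4 GPUs over {label}: {s:.2f}x")
```

With these made-up figures, four GPUs give roughly a 3.2x speedup over the slower link and 3.8x over the faster one; a model with a shorter compute step would lose proportionally more to communication.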

8. Availability and Market Conditions

In recent years, GPUs have faced supply shortages due to increased demand in gaming, cryptocurrency mining, and AI research. This has driven up prices and limited availability. Before buying a GPU, it’s crucial to research the current market and consider waiting for restocks or deals if the prices seem inflated.

9. Cloud-Based GPUs vs. On-Premise

Finally, consider whether you need to purchase a GPU at all. Many cloud providers offer GPU instances that can be rented for short- or long-term use. Services like AWS, Google Cloud, and Microsoft Azure provide on-demand access to high-end GPUs, allowing you to avoid the upfront cost of purchasing hardware.

Cloud-based GPUs are particularly useful for large-scale projects that require infrequent but intensive GPU use. However, if you’re running machine learning experiments frequently or need 24/7 access to a GPU, an on-premise setup might be more cost-effective in the long run.
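
Whether renting or buying is cheaper comes down to how many GPU-hours you will actually use. Here is a rough break-even sketch, assuming the only ongoing cost of owning is electricity; the function name is hypothetical and all prices are placeholders, not quotes from any provider.

```python
import math


def breakeven_gpu_hours(hardware_cost: float, cloud_rate_per_hour: float,
                        power_kw: float = 0.0, electricity_per_kwh: float = 0.0) -> float:
    """GPU-hours of use after which buying hardware beats renting.

    Owning costs the purchase price up front plus electricity per hour of use;
    renting costs cloud_rate_per_hour. Ignores resale value, maintenance,
    cooling, and the cost of your own time.
    """
    own_cost_per_hour = power_kw * electricity_per_kwh
    saving_per_hour = cloud_rate_per_hour - own_cost_per_hour
    if saving_per_hour <= 0:
        return math.inf  # owning never catches up with renting
    return hardware_cost / saving_per_hour


if __name__ == "__main__":
    # All figures are hypothetical placeholders: substitute real quotes.
    hours = breakeven_gpu_hours(hardware_cost=2000, cloud_rate_per_hour=1.50,
                                power_kw=0.45, electricity_per_kwh=0.15)
    print(f"Break-even after ~{hours:.0f} GPU-hours "
          f"(~{hours / 24:.0f} days of round-the-clock use)")
```

With these placeholder numbers, the break-even point is about 1,400 GPU-hours, roughly two months of continuous use; occasional users may never reach it.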

Conclusion

When choosing a GPU for machine learning, it's essential to consider compute power, memory capacity, precision support, framework compatibility, budget, and power and cooling requirements. Your decision should also factor in whether multi-GPU setups or cloud-based alternatives suit your specific project needs. By understanding the key features and limitations of different GPU options, you can make an informed choice that aligns with your machine learning goals and budget.
